Artificial voices like Alexa and Siri might seem ubiquitous now, but they are just the latest development in a 200-year effort to synthesise human speech. Alex Lee, who is visually impaired, describes how the disembodied voices helping him with everyday tasks came into being.

The sweet sound of synthetic speech

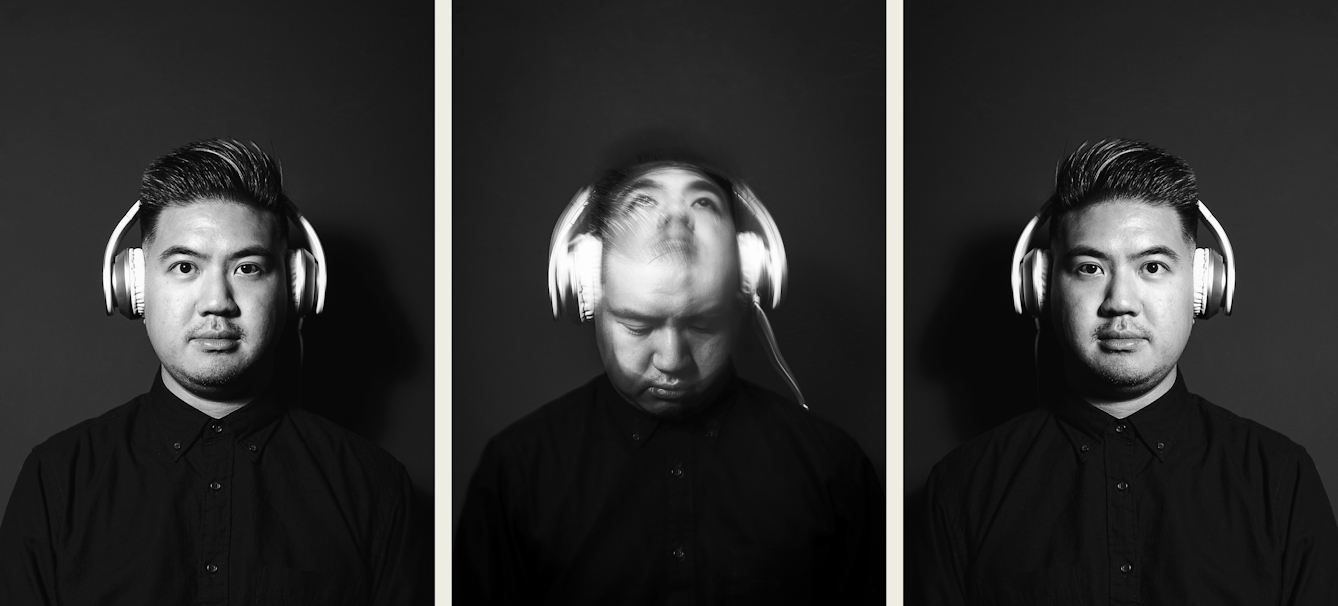

Words by Alex Lee, photography by Ian Treherne. Average reading time: 7 minutes.


A man is reading a lengthy news article to me. The cadence and pitch of his voice vary as he reads, emphasising certain words using his larynx. At the start of each sentence, and whenever he needs to pause, he takes a small breath and then carries on.

His name is Alex, and in his current form he doesn’t have a mouth or even a body. He’s 869 megabytes of synthetic speech downloaded onto my iPhone. He’s just one artificial voice out of several hundred, barking words into the ears of millions of people around the world. Without synthesised speech, I’d be lost. Babbling disembodied voices are the things helping me complete almost every single life task.

It’s not just blind people who rely on synthetic speech. Go into a friend’s kitchen or living room, and you’ll find it populated with smart speakers and talking gadgets powered by digital assistants like Alexa and Siri. But years before these robotic voices became a mainstay of modern life, artificial voices were already telling visually impaired people the time, reading documents for them, and helping them use computers.

Engineers have been trying to create synthetic speech for over 200 years, but until the 20th century, their machines could only form single vowel or consonant sounds. In 1928, Bell Labs inventor Homer Dudley created the Vocoder. The device analysed human speech and compressed it into low-frequency electronic signals that needed far less bandwidth than an ordinary telephone signal, reducing the bandwidth required for long-distance calls.

At the other end, electronic filters translated those signals back into a harsh, robotic voice that mimicked human speech. The Vocoder was used in the Second World War to help scramble and secure calls between world leaders like Franklin D Roosevelt and Winston Churchill. It didn’t sound human at all, because it re-created speech rather than sampling or recording it.

In the late 1930s, Dudley unveiled a simpler version of the Vocoder called the Voder (Voice Operating Demonstrator), widely recognised as the world’s first true speech synthesiser. It looked like a piano and was played almost like an instrument. A wrist bar let the operator switch between a buzzing oscillator, which produced vowels and nasal consonants, and a hissing noise source, while a foot pedal controlled the pitch.

After two demonstrations at exhibitions and fairs in America, it was never seen again. “The Voder was really the very first electronic speech synthesiser that could create fully connected speech. It sounded really electrical and it did not sound human,” explains Mara Mills, Associate Professor of Media, Culture and Communication at New York University, who researches the history of assistive technology and speech synthesis.

“People who attended the World's Fair would just call out words and the person playing the piano would make any sound – any sentence – with it.”


But that first demonstration showed that it was possible to electronically mimic human speech. New developments followed, like the Pattern Playback in the late 1940s. Using this machine, Franklin Cooper and his colleagues at Haskins Laboratories in the US were able to convert spectrograms of sound back into speech.

The first screen readers

Speech synthesis evolved even further in the 1950s and 1960s, thanks to the use of resonant electrical circuits, and then integrated circuits and computer chips. Scientists were able to successfully mimic the way the human larynx makes sound. Some synthesisers were made by prerecording certain phonemes and then stringing them together to make words and sentences, a technique called concatenative speech synthesis.

Robotic voices entered the mainstream through toys like the Speak & Spell and Radio Shack’s Vox Clock, and arcade games like Stratovox and Berzerk. While those uses were entertaining and even educational, it was the pairing of speech synthesis with reading machines that really changed the game for visually impaired people.

In the 1960s, computer scientist Ray Kurzweil was studying pattern recognition and optical character recognition (OCR), the conversion of printed text into machine-encoded text. At the time, machines could only read a couple of different fonts, but many different industries sought the technology. “People wanted mail sorters to work automatically so you wouldn't have to hire humans, and people also wanted to be able to deposit a cheque without a bank teller,” Mills says.

The following decade, Kurzweil met a blind man on an aeroplane. The two discussed the challenges he had reading printed material, and Kurzweil decided to make a reading machine for blind people that could read all kinds of text, incorporating a flatbed scanner and the robotic-sounding Votrax speech synthesiser.



In 1976, the bulky and eye-wateringly expensive Kurzweil Reading Machine was released, costing between $30,000 and $50,000. The machines sat in libraries and schools; only celebrities like Stevie Wonder could afford to own one outright.

Ted Henter, a computer programmer who grew up in Panama, lost his sight in 1978 after a car accident and remembers how bulky the machines were. “I used one a couple of times in a library. They were quite big – almost like a washing machine,” he says. His own first talking computer terminal was bought for him by the government in the early 1980s, and cost $6,000.

“It was just a terminal – it wasn't even what we think of as a personal computer. You actually had to take that terminal and plug it into a mainframe or minicomputer to do anything,” he says. “Very few people could afford it as individuals.”

Henter went on to create the screen reader JAWS (Job Access With Speech) – one of the first of its kind and arguably the most popular screen reader today. Early screen readers allowed visually impaired people to use their own command-line computers running MS-DOS. An external speech synthesiser read out the different textual elements on the screen. Without graphics, the text was easy to parse into speech.

Adding the human touch

The challenge came in the 1990s when companies moved over to graphical computers with Windows and mice, which didn’t work with existing command-line screen readers. “A lot of blind people were going to lose their jobs if somebody didn’t come up with a solution for Microsoft Windows,” Henter remembers. So in 1991, he and his team began developing a version of JAWS for Windows.

“The Windows product did take at least four years to develop – maybe five – and when we finally released it in January of 1995, you could read Windows with it, but it wasn't a terrific product, but it was better than whatever else was around.”

Since then, screen readers have improved dramatically, allowing people to use their phones, browse Instagram and listen to articles at extraordinary speeds. Much of that high-speed listening relies on formant synthesis, which generates speech by modelling the resonant frequencies (formants) of the human voice rather than stitching together recordings, so it stays comprehensible even when sped up. Many speech synthesisers still use concatenative synthesis, but more recently, digital assistants like Alexa and Siri have begun using artificial intelligence and neural networks to speak words with inflection based on context.

Visually impaired people now have a choice of how they want their screen reader to sound. Speech synthesisers have never sounded more human. And with voices like Alex, they’re even synthesising breathing.

“All of this is totally mainstream now,” says Robin Christopherson, Head of Digital Inclusion at technology-access charity AbilityNet, and a screen-reader user himself. “But it’s because of the needs of the disabled community that they were developed in the first place.” Without the work of blind pioneers like Henter, we wouldn’t have the high-quality speech synthesis that everyone enjoys today.

About the contributors

Alex Lee

Alex is a tech and culture journalist. He is currently tinkering with gadgets and writing about them for the Independent. You may have previously seen his work in the Guardian, Wired and Logic magazine. When he’s not complaining about his struggles with accessibility, you’ll likely find him in a cinema somewhere, attempting to watch the latest science-fiction film.

Ian Treherne

Ian Treherne was born deaf. His degenerative eye condition, which naturally cropped the world around him, gave him a unique eye for capturing moments in time. Using photography as a tool, and as a form of compensation for his lack of sight, Ian relies on the lens of the camera rather than his own eyes to sensitively capture the beauty and distortion of a world he is unable to see. Ian Treherne is an ambassador for the charity Sense, has worked on large projects about the Paralympics with Channel 4 and has been mentored by photographer Rankin.